Stealing Google's Coding Practices for Academia

I'm spending the year in Google's Visiting Faculty program. I had a few goals for my experience here:

(I'd love it if we made more progress as a community on standardizing our biblio handling. Gün probably had it right with CrossTeX -- perhaps we should all join in the fray?)

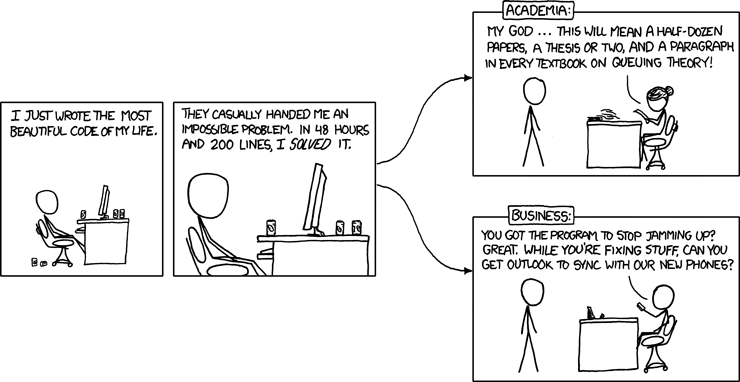

|

| From xkcd 378 |

- Learn learn learn! I hoped to get a different perspective from the inside of the largest collection of computing & distributed systems that the world has ever seen, and to learn enough about machine learning to think better about providing systems support for it. I haven't been disappointed.

- Do some real engineering. I spend most of my time as a faculty member teaching & mentoring my Ph.D. students in research. I love this - it's terribly fun and working with fantastic students is an incredibly rewarding experience. But I also get a lot of creative satisfaction from coding, and I can only carve out a bit of my faculty time to dedicate to it. I haven't written large amounts of production code since I was 21 - and the world has changed a lot since then.

- Contribute something useful to Google while I was here. They're paying my salary for the time I'm here, so I figure I shouldn't be a completely useless sponge that just spends all the time playing with their toys.

It's been a blast. I have learned a ton, and I've gotten to write quite a bit of code. As it turns out, I've been working on two relatively orthogonal programming-heavy projects: One with a research flavor aimed at scaling training deep neural networks better across more workers, and one with an engineering flavor aimed at cleaning up the TensorFlow code to better support really big tensors. (As Gates may or may not have famously noted, "Surely 2 billion entries in a Tensor is enough for anybody").

This latter engineering effort has focused on both handling big tensors and returning more semantically helpful error messages when programmers try to push too much stuff through ops that have been optimized by using, e.g., 32 bit pointers. (Particularly without Google's GPUCC compiler, used internally, using 64 bit indices for accessing tensors is quite slow in CUDA). I picked the cleanup job for a few reasons. First, I wanted to get a very hands-on feel for what was involved in making the TensorFlow (or mxnet, or any modern deep learning framework) code be "correct" -- I have an itch here that I hope to explore post-sabbatical that TensorFlow and the related systems are absolutely ripe for a more formal specification and code generation approach, and I wanted to get my hands dirty across a variety of the kernels to better understand the gross reality of lots of high-performance C++ code. And it scratches my itch of getting my feet dirty with real-world software engineering that's useful to people inside & outside of Google: You break TensorFlow, you break RankBrain and SmartReply. Fix bugs, everyone gets epsilon happier.

This latter engineering effort has focused on both handling big tensors and returning more semantically helpful error messages when programmers try to push too much stuff through ops that have been optimized by using, e.g., 32 bit pointers. (Particularly without Google's GPUCC compiler, used internally, using 64 bit indices for accessing tensors is quite slow in CUDA). I picked the cleanup job for a few reasons. First, I wanted to get a very hands-on feel for what was involved in making the TensorFlow (or mxnet, or any modern deep learning framework) code be "correct" -- I have an itch here that I hope to explore post-sabbatical that TensorFlow and the related systems are absolutely ripe for a more formal specification and code generation approach, and I wanted to get my hands dirty across a variety of the kernels to better understand the gross reality of lots of high-performance C++ code. And it scratches my itch of getting my feet dirty with real-world software engineering that's useful to people inside & outside of Google: You break TensorFlow, you break RankBrain and SmartReply. Fix bugs, everyone gets epsilon happier.

Stealing Ideas Back To Academia

One of my explicit goals was to steal ideas from Google that I could feed back into teaching and mentoring. There's a long-running discussion about academic code vs production code, and Google is widely viewed as having a well-orchestrated code review and style guide process, combined with very helpful tooling, that produces quite high quality code. My experience thus far agrees with this general perception -- I've gotten great and actionable feedback on the code I've written, which has turned up bugs that were missed by tests, observed cleaner ways to write it, and noted problems that it might create in the future. I'm sold, at least as the way code review is handled in the Brain group.

This leads immediately to the question:

What of this industrial approach can be profitably used in developing research code?1

Research & production code differ, and rightly so. In many, though not all, cases, research code quickly becomes abandonware. Fewer people work on it. These combine to make it less worthwhile to support the kind of extensive testing that most industry code uses. Testing, of course, serves a dual purpose of making sure the system works, and also of making it easier for someone to come along and make changes to it without wrecking everything.

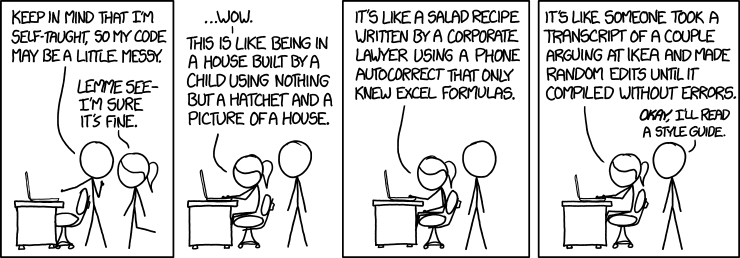

|

| Not the goal of learning from industry programming. |

I encourage my students at CMU quite strongly to release all of the code they develop, and to put a modest effort into making sure that someone else can run it later. But with the exception of libcuckoo, we rarely support them on an ongoing basis. So, without slowing down our ability to do research, or even speeding it up, what can we learn from our industrial friends?

1. Arbitrary and capricious style adherence.

Something I've seen both externally through the Go project, and internally at Google, is that mindlessly adhering to common formatting rules when the choices are approximately arbitrary, is a wonderful thing. When possible, mechanize it - as go does through gofmt. Other students and external people can come in and pick up your code with less cognitive load to get into the spirit of it, and it's easier to edit other people's stuff.

Suggestion: Just follow the Google Style Guides, since they're nice enough to publish them. (For Python, PEP8 is probably the best choice if they ever differ, for compatibility with the rest of the world. The Google style guide is mostly a superset of PEP8).

As part of your onboarding process, get students set up with the appropriate dotfiles that just Do The Right Thing out of the box. I've put a list in the next section. What? You're an academic research group that doesn't have a formal onboarding process? Yeah, neither does my group at CMU, and my time here has made me realize how important and useful it would be. That's going to be the subject of a later blog post.

2 Tooling, Tooling, Tooling.

Revision control: Standardize on a revision control framework and a code review framework for your group. GitHub is a natural choice if the educational discounts and free private repositories are sufficient for your needs. If you do a lot of data management, you may find that GH isn't sufficient, but we've moved all of our group's stuff to it to good effect. Key, though, is getting as much buy-in as possible from your students and other research groups around you. There's a network effect involved in this that's useful to exploit. It's also handy for students going on the industrial job market, since many employers like to see their GitHub profile. Free resume! Wahoo!

Auto-formatters: Make it thought-free to follow the silly style guide. Add the appropriate save hooks to your editors. This shouldn't take any of your brainpower, just automate it all.

- Go: gofmt

- Python: pyformat

- C/C++: clang-format

- Java: google-java-format

Compiler warnings, clang-tidy, pylint, etc.: They're a bit nitpicky, but I'm pretty sold on mandating a clean pass when submitting code. Use all of the C/C++ warnings, both -Wall and -Wextra. There's a bit of pain involved using them with rapidly-developing code, but it's offset by the extra bugs they catch.

- Go: go vet

- Python: pylint

- C/C++: clang-tidy (plus -Wall -Wextra, and on clang, -Weverything)

- Java: errorprone.info

I'm particularly fond of pylint these days, because it catches quite a few errors that would normally be caught by the type system in other languages, letting you find more bugs before you even run your python program.

Don't just tell people to use these tools: Integrate them as a mandatory part of the build and code management system.

Don't just tell people to use these tools: Integrate them as a mandatory part of the build and code management system.

Be forgiving when people want to temporarily disable the warnings. I'd be just fine with seeing a lot of #pylint disables in research code as long as it wasn't being abused.

Automate tests and experiments early on: One thing I tell my students is that they should aim, when possible, to be able to run their experiments with a single command, regenerate the data files and graphs with at most one more, and rebuild the paper. The same applies to tests, and it's probably low-overhead to get both automated from the very beginning.

I don't think that fully test-driven development is particularly necessary in academic code, but the right set of unit tests are great for rapidly iterating on your code, because they tell you when you broke something stupid. I have a hypothesis that better testing might make it substantially easier to have summer interns or short-term undergrads work in your group.

Hyeontaek Lim notes,

Automatic tests and experiments are very useful in accelerating rapid integration. It can produce the final evaluation results with a high level of robustness and reproducibility. The practice of using automatic test and experiments hinders bad habits of leaving uncommitted changes and manual configuration of systems that many people fall into while trying to get quick and dirty evaluation results.

I think this trap is particularly easy for people who've just come from undergrad, where lack of revision control and rapid hacking without a coherent development practice is the norm.

Bug tracking: Sounds odd for an academic project, but I suspect that a bug tracker might be a nicely transparent way of managing the big todo list that's always associated with a project in a shared way.

But: Your goal isn't to kill all the bugs. It's to do research. Be aggressive about pruning the bugs - use it as a GTD list that you can share with your colleagues more than a must-do list.

I don't feel as strongly about the importance of this for academic code, but if you think I'm wrong, comment below and let me know why!

[Priority 2]: Make it easy to use asan and tsan where applicable: If you introduce your {students, advisor, colleagues} to tsan and asan and they get it integrated into their tests at the time a project starts, it's a great source of extra information that doesn't come at a high cost. Me, I'd drop it like a hot potato if it started becoming a huge burden to maintain or was producing a lot of false positives, unless I was trying something very specific and tricky with concurrency. This starts to become a pain with multi-language projects, but the cost is low if you're purely in C/C++.

[Priority TBD] Continuous Integration systems are pretty awesome, but their utility may be higher for supported code: At Google and almost all code-quality-focused companies, a CI system is one of the backbone elements of development. Jenkins, which seems to be the standard open source answer, annoys me, but I do love finding bugs early. If it were lower-overhead, I'd do it as a matter of course, but I'm not convinced that the tooling available today provides as much benefit in the academic environment except for those projects that you decide to really transition via a supported OSS release. (Our libcuckoo work, or at the extreme end, projects like Spark.)

I should have something intelligent here to say about balancing local language expertise with using a language best suited for the job, but I don't. I'll leave this minefield for others to explore. :)

Having access to the compute resources necessary to run this stuff reliably and consistently can be a challenge in the often very ad-hoc academic environment. GitHub is a start, but as a community, we should put our heads together and identify ways to manage it better. Cloud options are probably the answer, but it can be difficult to overcome the academic illusion that it's cheaper to do it by ourselves. (It's not - we underprice our own and our students time!)

The same applies to writing papers. For example, our group has a common LaTeX paper skeleton framework (it's on GitHub, anyone's welcome to use it and contribute), primarily developed by Michael Kaminsky, that captures the essence of the ACM and Usenix templates but without the horrible legacy gloop. People may have their favorite tricks, but it's awesome as an advisor to be able to intuitively navigate all of my students' papers source.

3. Support Code Reviews, but thoughtfully.

Code review is shockingly awesome when done well. My personal experience with it thus far is that I had no clue how many bugs I introduced when writing code solo that I'd later have to spend more effort filtering out with testing & debugging.

A great code review mechanism in academic projects is pair programming. The last major coding I did in an academic project was the Data-Oriented Transfer (DOT) project, where Michael Kaminsky and I mostly pair-programmed our parts of it. It was a stellar experience - we wrote thousands of lines of often-intricate async code together that actually worked. (Yes, it's nasty 2005-era async network code; it would look drastically better if written using today's frameworks. But it was 2005, and it worked. :)

Unbeknownst to me, two of my students also pair programmed large parts of the first FAWN project prototypes, which is likely our group's most impactful overall research project to date. (I hadn't known this until I asked for feedback on this article!) Vijay noted:

Tool-based code review is easier in some ways, but adds challenges for academia. I've committed a bunch of changes to TensorFlow since it was open sourced (and since the machinery properly attributed commits to my GitHub handle). Each of those commits had to be "LGTM" (looks good to me) by someone within Google, before it could be submitted. I term this "gating" code review. Google's code review process is "lightweight", review tool-based code review - much like you'd do using a tool like Gerrit (the link has some explanation of the review workflow for those unfamiliar).

Lightweight tool-based code review is designed to provide benefits similar to pair programming in terms of extra eyes on the code, but without needing quite as close coupling. When writing code without someone else's eyes on it, it turns out that I introduce errors all the time that are subtle enough that they often get through tests. (Of course, that's biased - if they break the tests, I catch them before I try to submit them. :)

A great recent example: In this commit to TensorFlow, I changed the sample_distorted_bounding_box.cc op to return a polite error if you tried to resize an image with dimensions larger than could be stored in a 32 bit signed integer. But in the first version of the code that I submitted for review, I didn't use the FastBoundsCheck function that I've tried to use throughout my changes for consistency. (It also checks non-negative to catch any overflow-related weirdness.) My reviewer noticed this and asked about it. When I'd written the code initially, it hadn't compiled using that, because the kernel could be instantiated with float types for the size argument, and the boundscheck function was integer-only. I'd simply written a quick inline test instead that could handle floats. When I was asked in code review why I hadn't used the usual pattern, I realized that there was actually an underlying error: The function was spec'd to handle only integral types (and, indeed, that's what the docs said) but the kernel had been registered for all numeric types. Great find - the solution both kept the code the same as all other bounds check cases, and getting rid of the incorrect registrations (this line) saved compile time generating the unused floating point & double versions and reduced the code size of any generated TensorFlow program that used those ops. Code review can keep you honest.

(And in one of my recent commits, my reviewer spotted that I'd unintentionally shadowed a variable, which had caused a test failure that was only caught by the later run of the CI system, because it occurred only in GPU code and I'd forgotten to run the GPU tests locally. His sharp eyes saved me from having to debug the test failure -- he spotted exactly the problem, just by looking carefully for 10 minutes.)

But code review can also slow things down in the short term. (Like, 10 minutes short term. :). The truth is that most of the time "spent" is actually earned back -- perhaps with a multiplier -- in reduced debugging time. But there can be problems when reviewers take too long, and as I note below, with managing very rapidly changing research code where you want to get some stuff committed quickly and keep moving. While it's clear to me that there's huge value in code review, I might do it differently in academia.

Concrete suggestion: When there are multiple students working on a project, provide the infrastructure for them to do code reviews, but consider not making them "gatekeeping" reviews -- just advisory. (See, e.g., some organizational pitfalls of code reviews). In general, "lightweight" code review serves a few functions, and we should be careful to explicitly choose those functions we want in research:

A great code review mechanism in academic projects is pair programming. The last major coding I did in an academic project was the Data-Oriented Transfer (DOT) project, where Michael Kaminsky and I mostly pair-programmed our parts of it. It was a stellar experience - we wrote thousands of lines of often-intricate async code together that actually worked. (Yes, it's nasty 2005-era async network code; it would look drastically better if written using today's frameworks. But it was 2005, and it worked. :)

Unbeknownst to me, two of my students also pair programmed large parts of the first FAWN project prototypes, which is likely our group's most impactful overall research project to date. (I hadn't known this until I asked for feedback on this article!) Vijay noted:

I really enjoyed peer coding with Amar -- we only got FAWN stuff done so quickly because we were working on it together (both design, algorithms, and implementation).But, of course, effective pair programming requires a lot of soft skills and compatible pairs. I'm not going to pretend that this solution works everywhere.

Tool-based code review is easier in some ways, but adds challenges for academia. I've committed a bunch of changes to TensorFlow since it was open sourced (and since the machinery properly attributed commits to my GitHub handle). Each of those commits had to be "LGTM" (looks good to me) by someone within Google, before it could be submitted. I term this "gating" code review. Google's code review process is "lightweight", review tool-based code review - much like you'd do using a tool like Gerrit (the link has some explanation of the review workflow for those unfamiliar).

Lightweight tool-based code review is designed to provide benefits similar to pair programming in terms of extra eyes on the code, but without needing quite as close coupling. When writing code without someone else's eyes on it, it turns out that I introduce errors all the time that are subtle enough that they often get through tests. (Of course, that's biased - if they break the tests, I catch them before I try to submit them. :)

(And in one of my recent commits, my reviewer spotted that I'd unintentionally shadowed a variable, which had caused a test failure that was only caught by the later run of the CI system, because it occurred only in GPU code and I'd forgotten to run the GPU tests locally. His sharp eyes saved me from having to debug the test failure -- he spotted exactly the problem, just by looking carefully for 10 minutes.)

But code review can also slow things down in the short term. (Like, 10 minutes short term. :). The truth is that most of the time "spent" is actually earned back -- perhaps with a multiplier -- in reduced debugging time. But there can be problems when reviewers take too long, and as I note below, with managing very rapidly changing research code where you want to get some stuff committed quickly and keep moving. While it's clear to me that there's huge value in code review, I might do it differently in academia.

Concrete suggestion: When there are multiple students working on a project, provide the infrastructure for them to do code reviews, but consider not making them "gatekeeping" reviews -- just advisory. (See, e.g., some organizational pitfalls of code reviews). In general, "lightweight" code review serves a few functions, and we should be careful to explicitly choose those functions we want in research:

- Maintaining stylistic / cultural coherence ("readability").

- Improving code quality.

- Giving programmers feedback for learning.

- Preventing bugs from reaching production.

- Keeping a team in sync with ongoing changes.

From an academic perspective, on most projects, I argue the focus should be on speed, education, and communication, and early detection of flaws that would fundamentally invalidate the research. From that perspective, supporting a form of non-gating code review may be an excellent way to balance things. I'm going to try this when I get back to academia, but I'd love to hear from others who've tried it. By non-gating, I mean post-commit review that provides feedback to the author, without being a stepping stone to getting additional code written.

(In many code review environments, the process of building upon an outstanding CL (change list) adds extra work: One usually patches in the pending CL into a new checkout of the repository and goes forward from there. It adds some amount of mental and layout juggling that's a distraction. I'm not convinced this cost is a good idea for standard research code.)

The focus of academic code reviews should probably be more narrow than, e.g., Google's fairly widely-scoped "make the code excellent" approach. Obviously, finding critical bugs is always important, but I think an approach that focuses on correcting one or two style issues, and/or showing the author one improved way to do things, is likely to be more appropriate for the academic environment. I would not like my students spending an hour commenting about missing periods at the end of documentation comments -- even though, for Go and in Google, I think it's a great policy that pays dividends for the 1000 other people who might have to read the docs generated from it. Rationale: Most academic code & code docs will be read by far fewer people and over a shorter period of time than industrial code, so biasing towards the write side is reasonable. I would like my students identifying that someone had used an n^2 algorithm on a dataset large enough to benefit from an n log n algorithm, or noting that 20 lines of handling different cases would be more simply expressed by a quick-and-dirty sort of three items.

Code reviews are only reasonable with good tooling and a culture that supports and welcomes them. As a cultural note, don't view code review purely as knowledge flowing from the experienced to the uninitiated: likely the biggest benefit is that it's just a different person taking a fresh look at the code. It's the exact same benefit we get from asking colleagues to review our papers before we submit them -- we eventually become blind to what we've written. Keep an open mind to the fruits of code review; we're all in the academic game to keep learning, and it's a great tool.

I suspect that GitHub's comments are a reasonable way to start for post-hoc commit review. It's not nearly as effective or intuitive as using Gerrit, but sometimes keeping it simple is better. Our group has tried a few of these approaches, and we're just on GitHub these days. YMMV - and I'd love to hear how others successfully manage this.

I suspect that GitHub's comments are a reasonable way to start for post-hoc commit review. It's not nearly as effective or intuitive as using Gerrit, but sometimes keeping it simple is better. Our group has tried a few of these approaches, and we're just on GitHub these days. YMMV - and I'd love to hear how others successfully manage this.

4. Don't forget that you're not a company: Release your junk!

In the midst of all this industrial-styled verbiage, don't forget that you're a researcher, not a production engineer. Release your code, even if it's crappy. You never know when someone might need it for their own research, and the world is strictly better when your research products are available for others to build on, even if you're a little embarrassed about the quality of the code. As a researcher, it's not your job to have the best thing ever: It's your job to be beaten later. Make it easy for people to beat you by using your work as a stepping stone. (They'll both cite you and thank you.)

Footnotes

1. By research code, I mean the kind of code produced as part of a longer-term, larger research project leading to one or more published papers, implemented by one or more Ph.D. or M.S. students. It's a separate and important question how to try to use some of these techniques in undergraduate education, but that's for another time.

Many thanks to Hyeontaek Lim, Vijay Vasudevan, and Matt Welsh for comments on draft versions of this document. Thanks to Jeremy Manson for pointing out google-java-format and errorpone, and Bryan Mills for noting -Weverything for clang. All errors, opinions, and saying-of-stupid-things are purely my own fault. And I assume that it's clear that while I'm spending the year at Google, this is all my personal opinion and take-away from the experience, not some official statement of any of the organizations I'm fortunate to be affiliated with.

Comments

Post a Comment